Pair

Beyond interaction

Medium

Master thesis

Role

Author

Year

2024

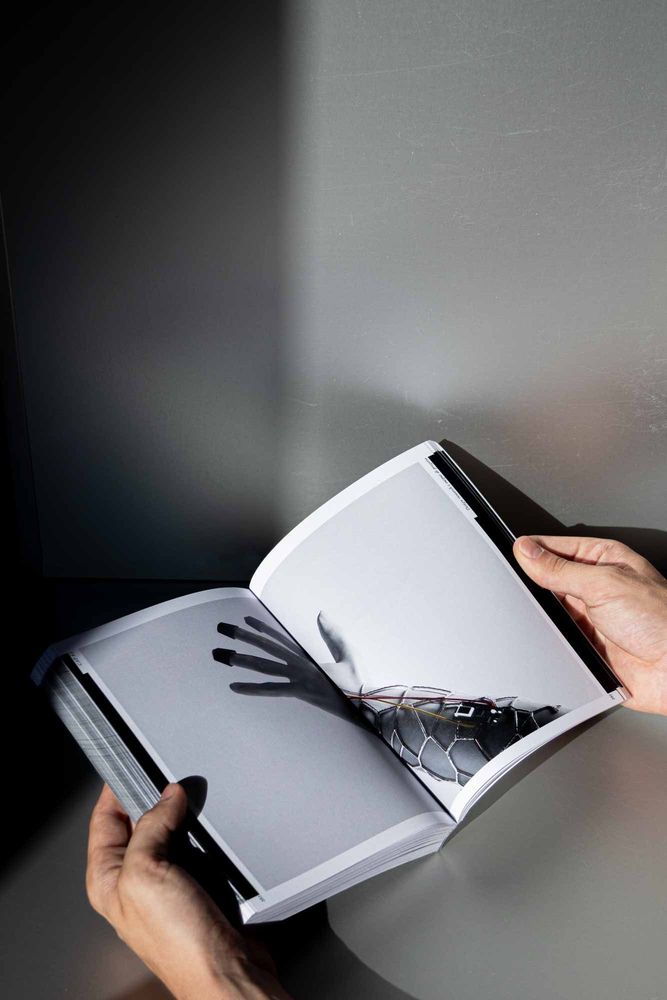

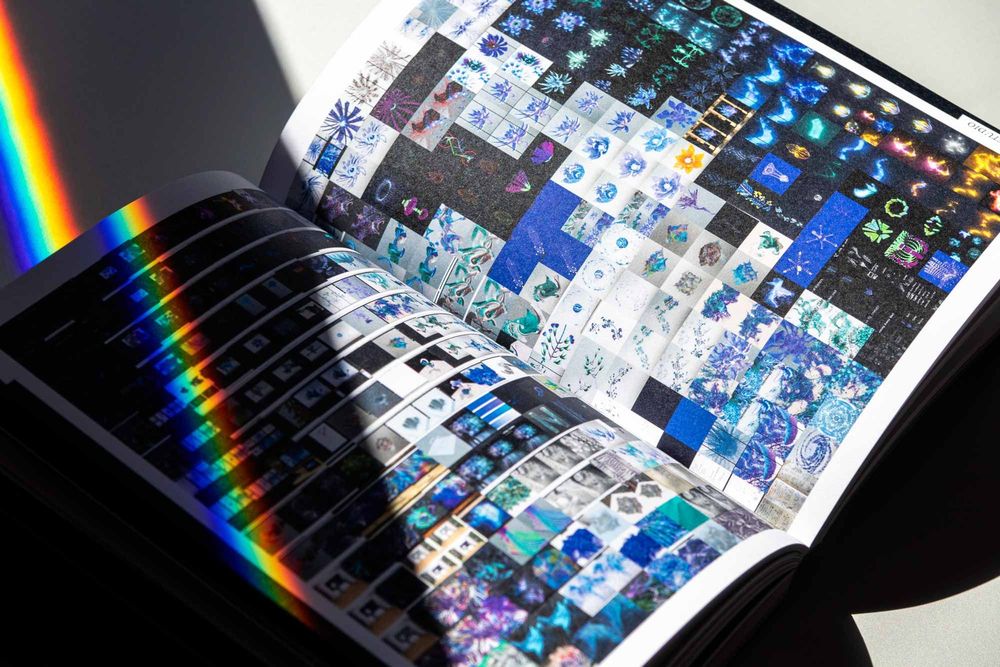

Deliverables

An editorial and speculative project about the new roles of the user and the designer in a dialogue interpreted by artificial intelligence.

The research, analysis and design path of this thesis began with a very specific question: how does the role of the user evolve with the advent of artificial intelligence? Although this question may appear relatively simple on the surface, it carries with it numerous concepts that deserve to be addressed in a historical moment such as the one we are living through. In particular, the question has two protagonists. The first is the user, the real protagonist of the relationship between human and machine, as well as of interaction design. The second is artificial intelligence, whose significance is technological, yes, but above all social.

The first two chapters give an overview of artificial intelligence and interaction design. Specifically, the first chapter traces the technical and social evolution of AI, while the second introduces the increasingly close relationship between programming and design.

Fundamental to understanding how to overcome these limitations was the study of the new relationship with machines that we experience today. Just as the person has gone from spectator to user, machines have gone from medium to interlocutor thanks to artificial intelligence. This shift brings challenges that need thorough analysis today, including ethical, moral and practical consequences, which were also examined through interviews with experts in the field.

The research and analysis conducted so far revealed interesting dynamics that deserved to be explored in an actual project. In particular, the ever-increasing technological capability of artificial intelligence and the increasingly human relationship we have with our devices led to the idea that the next interaction will not be an interaction: no longer based on what I do, but on what I mean.

In a context where machines can read their users' data to create personalized and unique outputs, the interaction relationship changes. It is no longer the human interacting with the machine, but the machine interacting with the human. This creates a new dialogue in which the point of view is reversed: the system becomes the sole narrator, while the user remains a listener of what he or she is unconsciously creating. This radical reversal goes far beyond the discipline of interaction design and raises important questions: what role would machines play if they were leading the game? Would they still be slaves, as they are today, or would their status be elevated?

From here, the design question arises: what if the device chooses the user?

This radical reversal of viewpoint calls for dedicated classifiers that let the system judge in its own way rather than conforming to human logic, just as happened in the history of computer vision. Where humans identify a need, the computer looks for a necessity; where we look for value and aesthetics, the system looks for quantity and quality of data, and so on. For the system, then, there is no absolute and inescapable right and wrong: some systems will have certain needs and others will have different ones, depending on their consciousness. However, building this emotional bridge between user and system requires something that can actually read users, get to know them and connect with them. This is how PAIR was born.

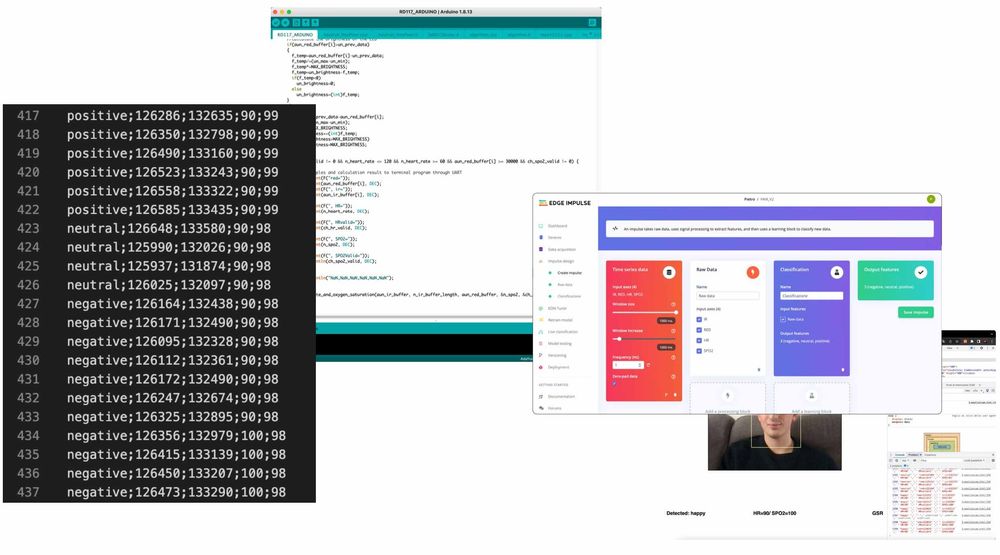

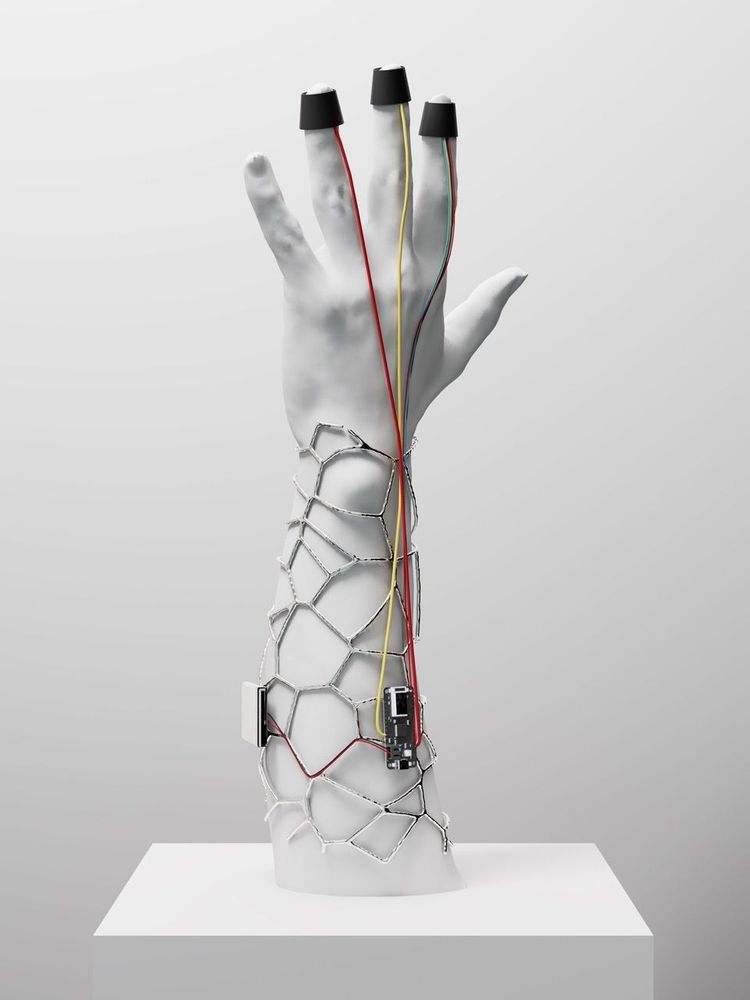

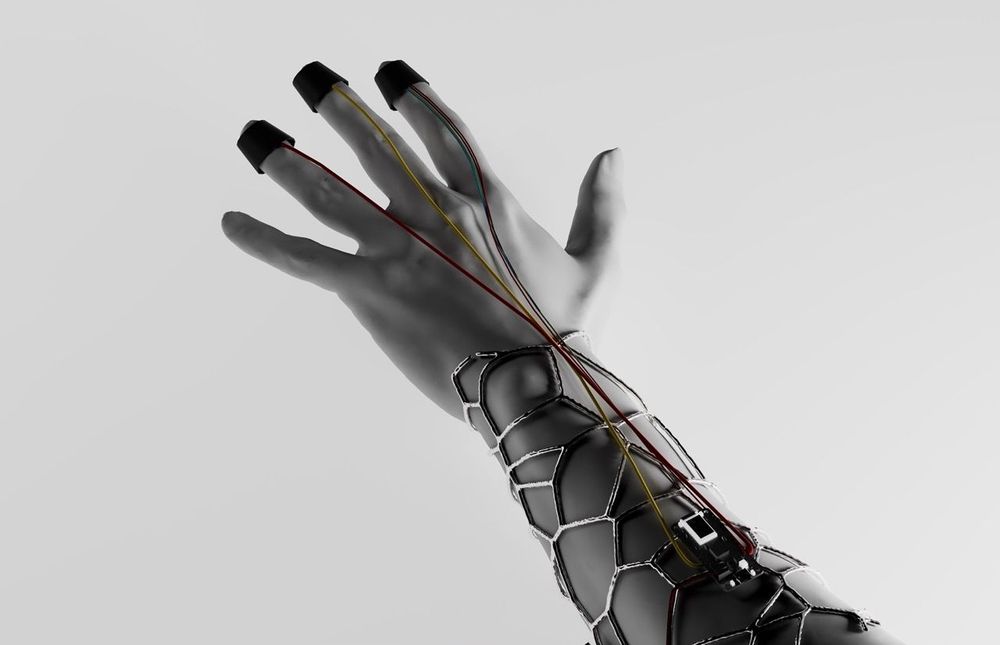

PAIR stands for People Artificial Intelligence Relationship, a service that sets out to shape a new form of interaction. PAIR offers its users a dedicated accessory that, through multiple biological sensors, gets to know its partner (the wearer), detects their body's responses to external stimuli, and maps what is positive, negative or neutral for them. Studies in affective computing brought to my attention the concept of affective wearables: devices that use sensors to estimate the emotional state of the wearer. This research, combined with numerous other papers, led to a low-fidelity prototype: an Arduino board fitted with a pulse sensor, a blood-oxygen sensor and a galvanic skin response sensor, able to read a person's biological state.
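To make the mapping step more concrete, here is a minimal Python sketch of how readings like the prototype's could be sorted into positive, negative or neutral. It is not the prototype's actual logic: the `Reading` fields, the comparison against a resting baseline in standard deviations, the threshold of 2 and the rule that treats a simultaneous rise in heart rate and skin conductance as "negative" are all hypothetical assumptions. Heart rate and skin conductance mostly reflect arousal rather than whether a stimulus is pleasant, so any real mapping would need per-wearer calibration. Blood oxygenation is carried along but not used by this rule.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Reading:
    heart_rate_bpm: float        # pulse sensor
    spo2_percent: float          # blood-oxygen sensor (not used by this rule)
    skin_conductance_us: float   # galvanic skin response, in microsiemens


def baseline(resting):
    """Mean and population standard deviation of heart rate and skin
    conductance over a resting window. A zero deviation falls back to 1.0
    so that classify() never divides by zero."""
    hr = [r.heart_rate_bpm for r in resting]
    gsr = [r.skin_conductance_us for r in resting]
    return (mean(hr), pstdev(hr) or 1.0), (mean(gsr), pstdev(gsr) or 1.0)


def classify(reading, base, threshold=2.0):
    """Label a reading 'positive', 'negative' or 'neutral'.

    HYPOTHETICAL rule for illustration only, not a validated model:
    - neutral: both channels within `threshold` deviations of rest
    - negative: both channels rise at least `threshold` above rest
    - positive: any other departure from rest
    """
    (hr_mean, hr_sd), (gsr_mean, gsr_sd) = base
    hr_z = (reading.heart_rate_bpm - hr_mean) / hr_sd
    gsr_z = (reading.skin_conductance_us - gsr_mean) / gsr_sd
    if abs(hr_z) < threshold and abs(gsr_z) < threshold:
        return "neutral"
    if hr_z >= threshold and gsr_z >= threshold:
        return "negative"
    return "positive"
```

In use, `baseline` would be computed once from a short resting window, then `classify` applied to each new reading while the wearer is exposed to a stimulus. Measuring change relative to the wearer's own resting state, rather than against fixed absolute values, reflects the idea that the device gets to know its particular partner.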